In Build a Go KV Cache from Scratch in 20 minutes, I walked you through what matters when writing a local cache, and eventually implemented one, whose performance was beaten badly by that of the popular go-cache on Github though. However, the bright side is that we can learn a lot from those excellent Github Go cache products, studying their features, applicable scenarios, and implementations, and extracting what we need.

In this article, I will mainly analyze and compare the four cache libraries of go-cache, bigcache, golang-lru, and groupcache, which are all very popular with thousands of users. And also I will list some features of go-generic-cache, which is the only one that supports generics of Go1.8.

Comparison and Assessment

High concurrency

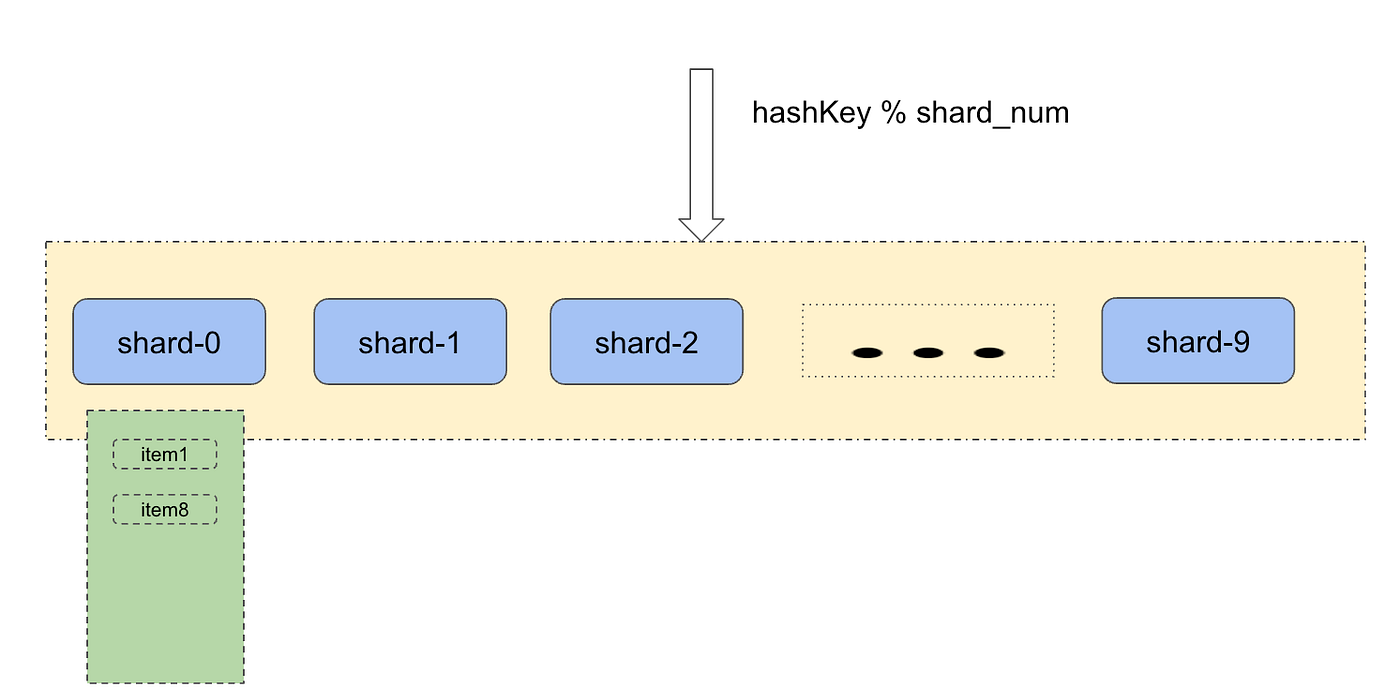

Generally, the cache uses locking in concurrency control. Assuming that there are 100 elements and only one read-write lock, then you have to do it one by one when two elements are to be written at the same time. Low efficiency, right?

So buckets are introduced, dividing the 100 elements into 10 groups, and controlling the 10 buckets with ten read-write locks respectively. In this case, there is only a 1% chance those two elements are in the same bucket at the same time, thus 100 elements can probably write simultaneously, improving the concurrency efficiency by 100 times!

Only go-cache and bigcache support sharding, both supporting a configurable number of buckets and controlling the concurrency of each bucket by sync.RWMutex. Their difference is that go-cache applies djb2 hash algorithm, while bigcache uses FNV. I haven’t done much research on the hash algorithm but can see these two seem not much difference in speed from this StackExchange post.

In contrast, neither groupcache nor golang-lru support sharding.

Data structure

The memories differ between caches, and we will mainly focus on

- Memory overhead. Whether the data structure design is reasonable, and whether additional memory allocation is required to implement some functions.

- Key and value store efficiency.

- GC influence.

Almost all caches depend onmap for storage. Therefore, improving the efficiency of map and avoiding additional GC overhead can make the cache much more efficient.

There are usually several methods.

- Allocate memory outside of the stack.

- Don’t use pointers in map, but only use primitive types like map[int]string. For pointers are often allocated to the heap, not using them is an effective way to stop frequent GC.

- Use simple data types and avoid complex ones to make the map as small as possible. If you are interested, please read runtime: Large maps cause significant GC pauses.

groupcache and golang-lru are exactly the same that they both save the key and value using the List.Element in container/list , and then save <key, list.Element> with map, and save the element to a List to provide the function of LRU.

Each time the cache needs to act twice when Set the key, restricts and deletes old data based on key amount.

As is seen, these two caches are almost identical in the data processing.

For go-cache, there is nothing special in its data structure, which uses the simple map[string]Item , in which Item is a struct containing an interface{} and expire. When the object is too large, go-cache’s performance might be affected by GC.

bigcache is the most distinctive and GC-friendly cache. It designs an underlying storage structure that separates the value from the hashmap, and stores the value in a BytesQueue, while the map only stores the simplest data structure map[uint64]uint32, within which the value is the index position in the queue.

From the above source code, it is easy to conclude that bigcache is the most ingenious in data structure among the four.

Capacity & evict policy

The scalability of the cache’s capacity determines the evict policies. The common evict policy algorithms include FIFO, LRU, etc.

As we all know, neither go-cache nor bigcache sets limits on the number of keys, indefinite addition is allowed, so no evict policy is needed. But the other two have limitations.

golang-lru, only supports a fixed number of keys and the LRU algorithm to eliminate old data. It saves the old data by a double-linked list, and each time it deletes the oldest.

groupcache provides the code for limiting the number of keys, but without a default number, and sets a limit to the total data size, which means the number is unlimited if size allows. Meanwhile, groupcache also eliminates the old data with the double-linked list of the container/list package and the LRU algorithm combined.

ttl

ttl, a feature pursued by many cache users, can save the users from worrying about cache explosion in many cases. And you need to adjust its value according to statistics only when performance issues occur.

golang-lru and groupcache are not ttl supportive, so you have to clear the data manually, or expect the automatic delete of the earliest data when the cache is full. But cache miss will be caused once the cache is inserted frequently.

bigcache supports all keys to be with a unified ttl, which is 10 minutes by default. There are two ways to remove the expired keys.

- Determine whether the oldest key needs to be deleted every time when Set is called.

go-cache supports each key to have a separate ttl by a customized Item object.

Also two methods,

- Janitor, automatically triggers the cleaning method with timer, monitors each key’s expiration time circularly, and deletes the key when it expires.

- Check if the key is expired when calling Get, if so, return nil and delete.

Stats

Whether the stats, including the number of cache hits and the number of requests, are exposed is also what we should consider when evaluating caches.

Both groupcache and bigcache support stats statistics, while the other two don’t.

As to implementation, groupcache only needs a CacheStats to count hits or gets each time Get is called, since it does not support bucketing. But bigcache needs a hashmapStats map for statistics in each cache, and exposes hits and misses data through a Stats.

Standalone

If a cache can support separate deployment, then its tuning, deployment, and performance monitoring become easier.

Only groupcache and bigcache support separate deployment and provide related HTTP interfaces for external access.

bigcache can expose methods likeGET, PUT, and DELETE by starting an httpServer.

groupcache claims to be the replacement of memcached, supporting the requests through the proto buffer protocol as well as httpServer.

Yet, groupcache can’t act as a well-embedded cache. You can only use the LRU cache of it via New, if you don’t use the HTTP interface.

More about groupcache

As an evergreen library with more than 47,000 users, groupcache supports consistent hash as well. It ensures the keys are in order by sorting, and using binary search to keep searching efficient.

In addition, groupcache also has a unique separation of maincache and hotcache, populating the hotkey into the hotcache for query efficiency.

Performance test

package golang_localcache

import (

"fmt"

"math/rand"

"testing"

"time"

"github.com/allegro/bigcache"

_ "github.com/allegro/bigcache"

"github.com/golang/groupcache/lru"

hashicorp "github.com/hashicorp/golang-lru"

gocache "github.com/patrickmn/go-cache"

)

func BenchmarkGoCache(b *testing.B) {

c := gocache.New(1*time.Minute, 5*time.Minute)

b.Run("Put", func(b *testing.B) {

for i := 0; i < b.N; i++ {

c.Add(toKey(i), randomVal(), gocache.DefaultExpiration)

}

})

b.Run("Get", func(b *testing.B) {

for i := 0; i < b.N; i++ {

value, found := c.Get(toKey(i))

if found {

_ = value

}

}

})

}

// bigcache

func BenchmarkBigCache(b *testing.B) {

c, _ := bigcache.NewBigCache(bigcache.DefaultConfig(10 * time.Minute))

b.Run("Put", func(b *testing.B) {

for i := 0; i < b.N; i++ {

c.Set(toKey(i), []byte(fmt.Sprintf("%v", randomVal())))

}

})

b.Run("Get", func(b *testing.B) {

for i := 0; i < b.N; i++ {

value, _ := c.Get(toKey(i))

if value != nil {

_ = value

}

}

})

}

// groupcache

func BenchmarkGroupCache(b *testing.B) {

c := lru.New(1024)

b.Run("Put", func(b *testing.B) {

for i := 0; i < b.N; i++ {

c.Add(toKey(i), randomVal())

}

})

b.Run("Get", func(b *testing.B) {

for i := 0; i < b.N; i++ {

value, _ := c.Get(toKey(i))

if value != nil {

_ = value

}

}

})

}

func BenchmarkGolangLRU(b *testing.B) {

// c := cache2go.Cache("test")

c, _ := hashicorp.New(1024)

b.Run("Put", func(b *testing.B) {

for i := 0; i < b.N; i++ {

c.Add(toKey(i), randomVal())

}

})

b.Run("Get", func(b *testing.B) {

for i := 0; i < b.N; i++ {

value, err := c.Get(toKey(i))

if err == true {

_ = value

}

}

})

}

func toKey(i int) string {

return fmt.Sprintf("item:%d", i)

}

type Val struct {

i int

k string

l []int

b bool

f float64

}

func randomVal() Val {

t := rand.Int()

l := make([]int, 1)

l[0] = t

return Val {

i: rand.Int(),

l: l,

b: true,

f: rand.Float64(),

}

}Val is used as the value of the cache.

- bigcache shows no advantages in either Get or Put. Its GC effect is even worse than go-cache and groupcache.

- groupcache and golang-lru don’t support sharding, but they are the most efficient.

This result has laid a big question mark on the above analysis, but is a good reminder that theory is a theory, and your production environment is the decisive factor when choosing the appropriate cache.

Go generic cache

I add this section because I am interested in whether adding generics to the cache will improve performance and coding experience.

generic-cache uses comparable interface as key type, and supports all kinds of cache algorithms, e.g. FIFO, LRU, LFU, MRU, etc.

Other features

- Implementing simple concurrency control, but no sharding solution provided.

- One key, one ttl, and Set a timer by starting a goroutine to trigger the cleaning. It is maybe the potential bottleneck if the cache capacity reaches a magnitude.

- Building the cache through the container/ring data structure.

- All cache implementations handle values with pointers, which are not GC friendly.

generic-cache is still an experimental product, and its data structures and functions are awaiting to be optimized.

Thanks for reading!

Reference

- patrickmn/go-cache: An in-memory key:value store/cache (similar to Memcached) library for Go, suitable for single-machine applications. (github.com)

- golang/groupcache: groupcache is a caching and cache-filling library, intended as a replacement for memcached in many cases. (github.com)

- allegro/bigcache: Efficient cache for gigabytes of data written in Go. (github.com)

- hashicorp/golang-lru at 80c98217689d6df152309d574ccc682b21dc802c (github.com)

Note: The post is authorized by original author to republish on our site. Original author is Stefanie Lai who is currently a Spotify engineer and lives in Stockholm, original post is published here.