As I keep learning Kubernetes piece by piece and come to understand its advantages more deeply, its rapid development and popularity no longer surprise me.

Backing from big companies like Google has undoubtedly given it a push, but its design, features, and convenience are the biggest attraction. Most of all, it breaks up the governance and lifecycle management of monolithic Internet systems and offers a new way to manage them.

Kubernetes is a large set of concepts: resource types such as Pod and Deployment, the control plane, and components such as Node, API server, and etcd. Absorbing them all may take years, so I picked the starting point that suited me best: the flexibility of Kubernetes.

As we know, Kubernetes could not have been so successful without its countless users and the many developers building around its ecosystem. Its architecture provides exceptionally flexible interface definitions, so community developers can implement custom logic behind these interfaces, serve a broader group of users, and accelerate the adoption of Kubernetes.

Kubernetes’s announcement that it would drop Docker was a hot topic for the first few months after it came out, with countless people discussing it, and the term CRI became widely known almost overnight. At that time, I added “understanding CRI” to my to-do list.

This article describes the Kubernetes container runtime from the following three aspects.

- Container Runtime, including High-level and Low-level, as well as their various implementations and comparison

- CRI interface and OCI standard

- Kubernetes multi-container runtime

Container Runtime History

The container runtime sits at the bottom of the Kubernetes architecture and defines how Pods and their containers run programs.

Its responsibilities have kept growing: isolating the runtime environment, allocating computing resources, organizing hardware, managing images, starting and stopping programs, and so on.

However, before version 1.5, Kubernetes had only two built-in container runtimes, Docker and rkt, which could not meet every user’s needs. For example, Kubernetes allocates CPU and memory to applications on Linux machines using systemd and cgroups. But what about Windows machines? Cgroups are not available there, so a container runtime built for Windows is needed. Other operating systems and distributions, such as CentOS, macOS, and Debian, could raise comparable compatibility problems.

Furthermore, building the runtimes into the Kubernetes code base clearly ran against Kubernetes’ principle of flexibility.

Then came the abstract CRI (Container Runtime Interface) design, which overcame these two shortcomings and brought significant changes to container runtime development.

- Decouple the kubelet from the actual container runtime implementation, which speeds up iteration

- Give community developers the freedom to build custom implementations. Any implementation that satisfies the CRI specification can be connected to Kubernetes and provide container services to users.

Container Runtime

Currently popular container runtimes, such as Docker, containerd, CRI-O, Frakti, pouch, and lxc, provide different functions for different scenarios. Each can be connected to the kubelet and do its work by implementing the CRI, either directly or through a shim.

To make this more intuitive, let’s divide these implementations further into high-level and low-level container runtimes.

- A high-level container runtime implements the CRI, connects to the kubelet, and calls the lower-level OCI runtime to complete management tasks. It also handles concerns such as image management and the gRPC API, and it can be thought of as a shim.

- A low-level container runtime focuses on the underlying technologies, for example using cgroups to carry out the management tasks required by OCI. Different implementations have different emphases, such as the more security-oriented gVisor.

Some of the most common container runtimes are:

High-level Container Runtime

The main contribution of a high-level container runtime is connecting to the kubelet and implementing the functions defined in the CRI. So what is the CRI, and what does it contain?

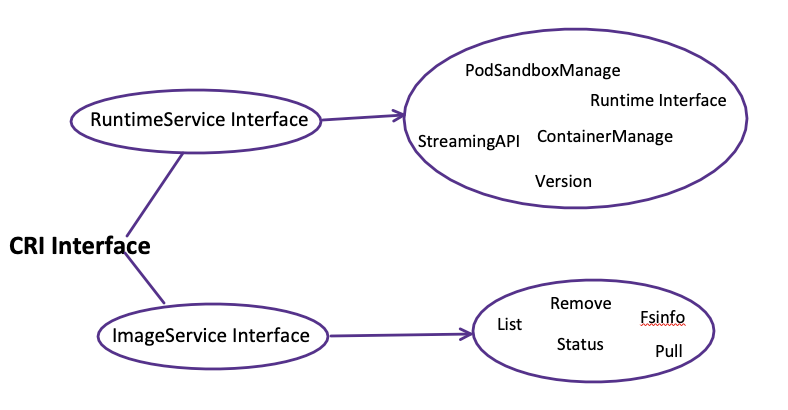

The CRI consists of two parts. One is the container runtime service (RuntimeService), which manages the life cycle of pods and containers. The other is the image service (ImageService), which manages the life cycle of images.

To dig into their characteristics, pros, and cons, let’s take a close look at some popular high-level container runtimes.
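To make the split between the two services concrete, here is a minimal Python sketch. The real CRI is a gRPC API defined in protobuf; the method names below mirror a few of its actual RPCs, but the signatures are heavily simplified, and `FakeRuntime` and `start_pod` are hypothetical names invented for this illustration.

```python
from abc import ABC, abstractmethod
from typing import Dict, List


class RuntimeService(ABC):
    """Pod sandbox and container life cycle (a small subset of the CRI RPCs)."""

    @abstractmethod
    def RunPodSandbox(self, pod_name: str) -> str: ...

    @abstractmethod
    def CreateContainer(self, sandbox_id: str, name: str, image: str) -> str: ...

    @abstractmethod
    def StartContainer(self, container_id: str) -> None: ...

    @abstractmethod
    def StopContainer(self, container_id: str) -> None: ...


class ImageService(ABC):
    """Image life cycle (again, only a subset)."""

    @abstractmethod
    def PullImage(self, image: str) -> str: ...

    @abstractmethod
    def ListImages(self) -> List[str]: ...


class FakeRuntime(RuntimeService, ImageService):
    """Hypothetical in-memory runtime: it records state but starts no processes."""

    def __init__(self) -> None:
        self.images = set()
        self.sandboxes: Dict[str, str] = {}    # sandbox id -> pod name
        self.containers: Dict[str, dict] = {}  # container id -> state

    def PullImage(self, image: str) -> str:
        self.images.add(image)
        return image

    def ListImages(self) -> List[str]:
        return sorted(self.images)

    def RunPodSandbox(self, pod_name: str) -> str:
        sandbox_id = f"sandbox-{len(self.sandboxes)}"
        self.sandboxes[sandbox_id] = pod_name
        return sandbox_id

    def CreateContainer(self, sandbox_id: str, name: str, image: str) -> str:
        if sandbox_id not in self.sandboxes:
            raise KeyError(f"unknown sandbox {sandbox_id!r}")
        if image not in self.images:
            raise LookupError(f"image {image!r} has not been pulled")
        container_id = f"{sandbox_id}/{name}"
        self.containers[container_id] = {"image": image, "state": "created"}
        return container_id

    def StartContainer(self, container_id: str) -> None:
        self.containers[container_id]["state"] = "running"

    def StopContainer(self, container_id: str) -> None:
        self.containers[container_id]["state"] = "exited"


def start_pod(runtime: RuntimeService, images: ImageService,
              pod_name: str, containers: Dict[str, str]) -> List[str]:
    """Roughly the order in which a kubelet-like caller drives the two services."""
    sandbox_id = runtime.RunPodSandbox(pod_name)
    started = []
    for name, image in containers.items():
        images.PullImage(image)
        container_id = runtime.CreateContainer(sandbox_id, name, image)
        runtime.StartContainer(container_id)
        started.append(container_id)
    return started
```

Because the caller depends only on the two abstract services, any runtime that implements them could be swapped in, which is the decoupling that the CRI is meant to provide.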

Containerd

Extracted from the early Docker source code, containerd is now an industry-standard container runtime. Its features include:

- Simplicity, robustness, and portability

- Running as a daemon on Linux and Windows

- Management of the complete container life cycle on the host system

- Image transfer and storage

- Container execution and monitoring

- Support of multiple runtimes

Containerd is highly modular: all modules are pluggable and loaded in the form of RPC services. Plug-ins declare their dependencies on one another, and the containerd core loads them in a unified way, so users can write plug-ins in Go to add functions. This design also makes containerd cross-platform and easy to embed in other software components.
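The idea of plug-ins that declare their dependencies and are loaded by a core can be sketched in a few lines of Python. This is not containerd’s code (containerd is written in Go, and its registration API looks different); `PluginRegistry` and the plug-in ids are hypothetical names chosen for this illustration.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+


class PluginRegistry:
    """Hypothetical core: plug-ins register an init function and their requirements."""

    def __init__(self):
        self._plugins = {}  # plug-in id -> (required ids, init function)

    def register(self, plugin_id, init, requires=()):
        self._plugins[plugin_id] = (tuple(requires), init)

    def load_all(self):
        # Map each plug-in to the plug-ins that must be initialized before it.
        graph = {pid: set(reqs) for pid, (reqs, _) in self._plugins.items()}
        missing = {dep for deps in graph.values() for dep in deps} - set(graph)
        if missing:
            raise LookupError(f"unregistered dependencies: {sorted(missing)}")

        loaded = {}
        # static_order() yields dependencies before the plug-ins that need them
        # and raises graphlib.CycleError if the declarations form a cycle.
        for pid in TopologicalSorter(graph).static_order():
            reqs, init = self._plugins[pid]
            loaded[pid] = init({dep: loaded[dep] for dep in reqs})
        return loaded


registry = PluginRegistry()
# Registration order does not matter; the declared requirements decide load order.
registry.register("cri", lambda deps: f"cri using {sorted(deps)}", requires=["runtime"])
registry.register("runtime", lambda deps: "runtime", requires=["content", "snapshotter"])
registry.register("content", lambda deps: "content store")
registry.register("snapshotter", lambda deps: "snapshotter")

print(list(registry.load_all()))  # dependencies appear before their dependents
```

Each init function receives only the plug-ins it declared, so a new plug-in can be added without touching the core or the existing plug-ins.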

CRI-O

CRI-O is the CRI implementation provided by Kubernetes.

- Serve as a lightweight alternative to using Docker as the Kubernetes runtime.

- Allow Kubernetes to use any OCI-compliant runtime as the container runtime for running Pods. runc and Kata Containers are supported, and in principle any runtime that conforms to OCI can be plugged in.

- Support OCI container images, which can be pulled from any container registry.

- Support CNI integration.

Below is its architectural design.

PouchContainer

PouchContainer is Alibaba’s open-source container engine, in which the CRI protocol layer and the cri-manager module implement the CRI shim function. Its advantages include:

- Strong isolation. Security features include hypervisor-based container technology, lxcfs, directory disk quotas, a patched Linux kernel, and more.

- P2P-based image distribution. Images are transferred between nodes, which eases the download pressure on the image registry and speeds up image downloads.

- No intrusion on the business. Besides running the business application itself, a PouchContainer container can also hold operation and maintenance suites, system services, and a process manager such as systemd.

Frakti

Frakti is a hypervisor-based container runtime that aims to provide stronger security and isolation.

Frakti serves as a CRI container runtime server. Its endpoint should be configured while starting kubelet. In the deployment, hyperd is also required as the API wrapper of runV. (From the official documentation.)

The Choice of High-level Container Runtime

Among the above four, containerd and CRI-O are the most popular high-level container runtimes, while the other two are slowly fading away. They lost their advantages as containerd strengthened its cross-platform capabilities and its support for Kubernetes (the CRI plug-in, added in 2018). At the time of writing, the latest release of PouchContainer was in January 2019, and that of Frakti in November 2018.

As for containerd and CRI-O, differences in function and design leave them roughly level. For example, containerd has no default implementation of container networking or of container application state storage, while CRI-O can provide networking through CNI plug-ins. Moreover, containerd’s RPC communication is slower than CRI-O’s direct calls to internal functions. However, the containerd community is more active, has good development momentum, and has a larger user base than CRI-O. For example, the firecracker-containerd project lets containerd manage Firecracker, AWS’s open-source lightweight microVM technology.

Low-level Container Runtime

Generally, a low-level container runtime is an implementation that receives a runnable file system (rootfs) and a configuration file (config.json) and runs an isolated process in compliance with the OCI specification.

So what is the OCI specification? Let’s take a quick look.
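As a rough illustration of what such a configuration file looks like, the Python sketch below writes a pared-down config.json next to a rootfs directory. The field names follow the OCI runtime-spec, but the values, the `bundle` directory name, and the helper `minimal_oci_config` are assumptions made for this example; a real bundle normally carries more settings (mounts, capabilities, resource limits), and `runc spec` will generate a complete default file.

```python
import json
import os


def minimal_oci_config(args, rootfs="rootfs", hostname="demo"):
    """Return a pared-down OCI runtime configuration as a plain dict."""
    return {
        "ociVersion": "1.0.2",
        "process": {
            "terminal": False,
            "user": {"uid": 0, "gid": 0},
            "args": list(args),  # the command the isolated process runs
            "env": ["PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin"],
            "cwd": "/",
        },
        # Path to the root file system, relative to the bundle directory.
        "root": {"path": rootfs, "readonly": True},
        "hostname": hostname,
        "linux": {
            # Each entry asks the runtime to create a new namespace of that type.
            "namespaces": [
                {"type": t} for t in ("pid", "network", "ipc", "uts", "mount")
            ],
        },
    }


bundle = "bundle"  # hypothetical bundle directory: bundle/rootfs + bundle/config.json
os.makedirs(os.path.join(bundle, "rootfs"), exist_ok=True)
with open(os.path.join(bundle, "config.json"), "w") as f:
    json.dump(minimal_oci_config(["sh", "-c", "echo hello"]), f, indent=2)
```

With a populated rootfs, a low-level runtime such as runc would typically be pointed at this directory; the sketch stops at producing the file and does not start a container.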

The Open Container Initiative (OCI) is a lightweight, open governance structure (project), formed under the auspices of the Linux Foundation for the express purpose of creating open industry standards around container formats and runtime. The OCI was launched on June 22nd, 2015, by Docker, CoreOS, and other leaders in the container industry.

Two of its specifications matter most here:

- Runtime-spec defines how a runtime reads the configuration file and prepares the environment in which a container runs.

- Image-spec defines the standardized structure of the image, including config file, manifest file, file directory structure, etc.

Some commonly used low-level container runtimes:

- runc: the container runtime we are most familiar with, and the one Docker uses by default.

- runV: a hypervisor-based OCI runtime. It isolates the container from the host by giving it a virtualized guest kernel, which makes the boundary clearer and strengthens the security of both the host and the container.

- gVisor: improves on runc’s security by isolating containers in a sandbox, at a relatively higher performance cost.

- wasm: its sandbox mechanism gives it better isolation and higher security than Docker, and it may well be the future direction.

According to the underlying container operating environment, we usually divide the container runtime into the following three categories:

- OSContainerRuntime

- HyperRuntime

- UnikernelRuntime

Here is how they differ.

Linux containers in OSContainerRuntime share the host’s Linux kernel and isolate process resources with technologies such as namespaces and cgroups.

Applications in the container access system resources the same way regular applications do and make system calls directly to the host kernel. However, the six namespaces (UTS, IPC, PID, Network, Mount, User) do not cover every Linux resource.

Each VM container in HyperRuntime has an independent Linux kernel, which gives more thorough and safer resource isolation than Linux containers, at a higher performance cost.

Containers in UnikernelRuntime run in the same highly secure environment as VM containers. They also rely on hypervisor technology for isolation, but a unikernel is more streamlined than the Linux kernel and carries no redundant drivers or services. The final image is smaller and starts faster, but there are disadvantages: some Linux-dependent tools are not available, and debugging is more troublesome.

How to Choose the Appropriate Low-level Container Runtime?

runc is still the most reliable choice when there are no special requirements, but runV is sometimes the better one. Two specific scenarios, for instance:

- A multi-tenant environment requires higher isolation.

- Financial systems have relatively high security requirements.

Nowadays, many cloud vendors build their container instance and serverless offerings on runV.

Low-level runtimes are lightweight and flexible, but they have obvious limitations. For example, runc supports only Linux and provides no persistence support. Therefore, complete CRI support is available only when a high-level and a low-level container runtime are used in combination.

Kubernetes Multi-Container Runtime

In the high-level container runtime part, I mentioned that containerd supports multiple container runtimes, and the motivation is easy to understand. Beyond what cloud service providers offer, container services can also be customized on a public cloud platform, so that runc runs most containers while runV runs the untrusted ones, giving them better isolation and higher security.

Since version 1.12, Kubernetes has provided a resource, RuntimeClass, that meets this requirement. Configuration takes the following three steps.

- Set up the container runtime on the Node

For containerd, set the handler name in the [plugins.cri.containerd.runtimes.${HANDLER_NAME}] section of /etc/containerd/config.toml

- Define RuntimeClass

- Select the desired container runtime by setting runtimeClassName in the PodSpec
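The last two steps can be sketched as manifests. The Python snippet below builds them as dictionaries and prints them as JSON, which kubectl accepts as well as YAML. The names `untrusted`, `kata`, and `sandboxed-nginx` are hypothetical; the `handler` value has to match the `${HANDLER_NAME}` configured on the node, and the `node.k8s.io/v1` API version assumes a reasonably recent cluster (earlier releases, including 1.12, served RuntimeClass under alpha or beta versions).

```python
import json


def runtime_class(name, handler):
    """RuntimeClass manifest: maps a cluster-wide name to a node-level handler."""
    return {
        "apiVersion": "node.k8s.io/v1",  # assumption: cluster serves the GA API
        "kind": "RuntimeClass",
        "metadata": {"name": name},
        "handler": handler,  # must match the handler name configured on the node
    }


def pod_with_runtime(name, image, runtime_class_name):
    """Pod manifest whose containers run under the given RuntimeClass."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "runtimeClassName": runtime_class_name,
            "containers": [{"name": name, "image": image}],
        },
    }


rc = runtime_class("untrusted", handler="kata")  # hypothetical names
pod = pod_with_runtime("sandboxed-nginx", "nginx", rc["metadata"]["name"])

print(json.dumps(rc, indent=2))
print(json.dumps(pod, indent=2))
```

Pods that omit runtimeClassName keep using the node’s default runtime, so only the workloads that need stronger isolation have to opt in.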

In the end

All of the above is just a small part of what the container runtime means to Kubernetes, plus some popular implementations. There is definitely more to explore in the future, such as how containerd works internally and how to write my own container runtime.

Note: This post is republished on our site with the original author’s authorization. The original author is Stefanie Lai, who is currently a Spotify engineer living in Stockholm. The original post is published here.